Neural Lens Modeling

Abstract

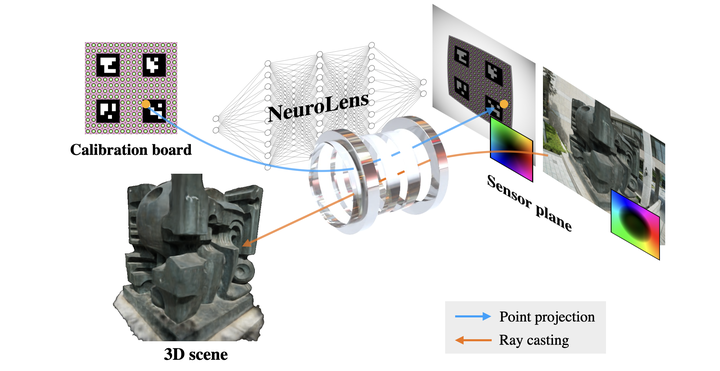

Recent methods for 3D reconstruction and rendering increasingly benefit from end-to-end optimization of the entire image formation process. However, this approach is currently limited: the effects of the optical hardware stack, and in particular of lenses, are not modeled in a differentiable way. This limits the quality achievable in camera calibration as well as the fidelity of 3D reconstruction results. In this paper, we propose a neural lens model for distortion and vignetting that can be used for both point projection and raycasting, and that can be optimized through both operations. This means that it can (optionally) be used for pre-capture calibration with classical calibration targets, and later for calibration or refinement during 3D reconstruction, for instance while optimizing a radiance field. To evaluate the proposed model, we assemble a comprehensive dataset covering a multitude of lenses from the Lensfun database. Using this and other real-world datasets, we show that our proposed lens model outperforms standard packages as well as recent approaches while being much easier to use and extend. The model generalizes across many lens types and is trivial to integrate into existing 3D reconstruction and rendering systems.
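A lens model that serves both point projection (3D point to pixel) and raycasting (pixel to ray) must be invertible, with one set of parameters driving both directions. The sketch below illustrates that requirement only. It is not the neural model described above: it assumes a classical pinhole camera with polynomial radial distortion and polynomial vignetting, and the names `LensModel`, `project`, `raycast`, and `vignetting` are hypothetical. Raycasting inverts the distortion numerically with Newton's method.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class LensModel:
    """Hypothetical stand-in for a learned lens model (NOT the paper's method):
    pinhole + polynomial radial distortion + polynomial vignetting.
    The same parameters drive both projection and raycasting."""
    f: float         # focal length in pixels
    cx: float        # principal point x (pixels)
    cy: float        # principal point y (pixels)
    k1: float = 0.0  # radial distortion coefficients (assumed polynomial form)
    k2: float = 0.0
    a1: float = 0.0  # vignetting coefficients (assumed polynomial form)
    a2: float = 0.0

    def _distort_radius(self, r: float) -> float:
        # r_d = r * (1 + k1 r^2 + k2 r^4)
        r2 = r * r
        return r * (1.0 + self.k1 * r2 + self.k2 * r2 * r2)

    def _undistort_radius(self, rd: float, iters: int = 20) -> float:
        # Newton's method on g(r) = distort(r) - rd, starting from r = rd.
        r = rd
        for _ in range(iters):
            r2 = r * r
            g = self._distort_radius(r) - rd
            dg = 1.0 + 3.0 * self.k1 * r2 + 5.0 * self.k2 * r2 * r2
            r -= g / dg
        return r

    def project(self, X: float, Y: float, Z: float) -> tuple[float, float]:
        """Camera-space 3D point (Z > 0) -> distorted pixel coordinates."""
        x, y = X / Z, Y / Z
        r = math.hypot(x, y)
        scale = self._distort_radius(r) / r if r > 0 else 1.0
        return self.f * x * scale + self.cx, self.f * y * scale + self.cy

    def raycast(self, u: float, v: float) -> tuple[float, float, float]:
        """Pixel coordinates -> unit ray direction in camera space."""
        xd, yd = (u - self.cx) / self.f, (v - self.cy) / self.f
        rd = math.hypot(xd, yd)
        scale = self._undistort_radius(rd) / rd if rd > 0 else 1.0
        x, y = xd * scale, yd * scale
        n = math.sqrt(x * x + y * y + 1.0)
        return x / n, y / n, 1.0 / n

    def vignetting(self, u: float, v: float) -> float:
        """Multiplicative intensity falloff at a pixel (1.0 at the center)."""
        xd, yd = (u - self.cx) / self.f, (v - self.cy) / self.f
        r2 = xd * xd + yd * yd
        return 1.0 + self.a1 * r2 + self.a2 * r2 * r2


if __name__ == "__main__":
    lens = LensModel(f=500.0, cx=320.0, cy=240.0, k1=-0.1, k2=0.01, a1=-0.3)
    u, v = lens.project(0.2, -0.1, 1.0)
    d = lens.raycast(u, v)
    # The recovered ray should be parallel to the original point (0.2, -0.1, 1.0).
    print(d[0] / d[2], d[1] / d[2])
```

Written in an autodiff framework, both `project` and `raycast` would be differentiable with respect to the lens parameters, which is the property the abstract relies on for optimizing through either operation. The proposed model replaces the fixed polynomials with a learned network; the polynomial form here is only an assumption for illustration.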