Christoph Lassner

Biography

I am co-founder of World Labs, the Spatial Intelligence company.

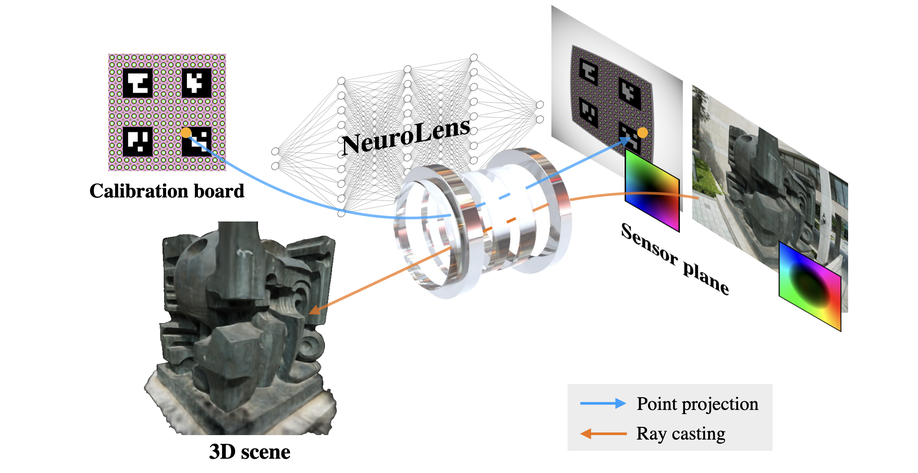

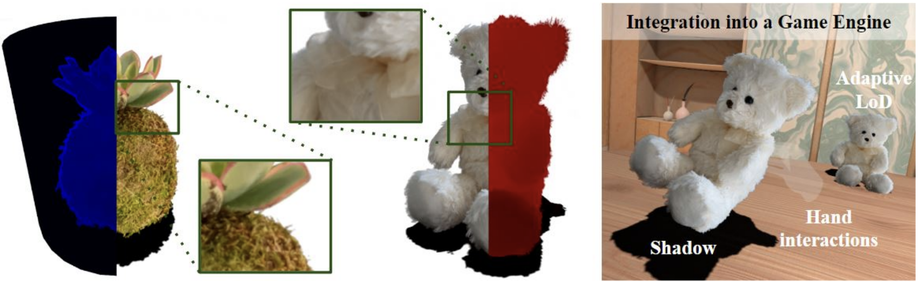

I am deeply curious about how we can build interactive virtual representations of the physical world that can be optimized and rendered efficiently to faithfully match our perception (visual and beyond; see, for example, Neural Assets, VEO, Neural 3D Video, NR-NeRF). A lot of my work focuses on perception and rendering systems for humans (see, for example, TAVA, HVH, ARCH, NBF, UP). I created the human pose estimation system for Amazon Halo, which is part of a system that creates a 3D model of your body using your smartphone. My team’s work at Meta on reconstructing and rendering radiance fields interactively was featured at Meta Connect 2022 and on CNET (co-presented with Zhao Dong et al.’s work on inverse rendering).

At the same time, I am very interested in the engineering challenges such systems create, and I was awarded an Honorable Mention at the ACM Multimedia Open-Source Software Competition 2016 for my work on decision forests. In 2021, I wrote the Pulsar renderer (now the sphere-based rendering backend for PyTorch3D), and I would love to find better ways to use low-level autodifferentiation on GPUs.

Previously, I was leading research teams at Epic Games and Meta Reality Labs Research; before joining Meta, I worked at Bodylabs, a startup that was acquired by Amazon. I completed my PhD at the Bernstein Center for Computational Neuroscience and the Max Planck Institute for Intelligent Systems in Tübingen.

If you would like to work together on challenges at the intersection of machine learning, computer graphics and computer vision (from modeling to engineering), please reach out!